In the run-up to the bank holiday weekend, I found myself deep in conversation with a friend who is working at the forefront of AI development. In an unexpected turn of events, the upshot was that I got to meet AI ‘Me’.

It all started innocently enough, if perhaps slightly rudely, with my verdict on the similes his new language model (running at home and optimised for chat) had produced when asked to “describe the planet Jupiter in a poetic manner”: “hardly f**king Keats, is it?”

Given the emergent nature of AI technology, my friend reasonably wondered aloud how good Keats’ work had been when he was only one year old. The conversation developed naturally, until I was told: “I can give you one of these to train on your sardonic writing style if you like?”

It’s no secret that media companies around the globe have been looking into ways they can use AI to “streamline” their reporting processes, with job titles like ‘SEO reporter’ and ‘AI-powered reporter’ (a new one on me, recently spotted at Newsquest by Prolific North’s editor) entering the lexicon. Even so, I didn’t believe my tech guru mate’s claims.

Having heard the AI’s attempts at poetry, I was convinced it wouldn’t be able to capture intangible human elements of writing such as sarcasm, nuance, satire or hyperbole. Would it even be able to work out which stories it was supposed to report on, rather than simply dumping gallons of word soup on us?

The challenge was laid down: send over my most “densely sneering” writing, I was instructed. “I’ll set AutoGPT off with my brand-new API key to go find something to write about as you.”

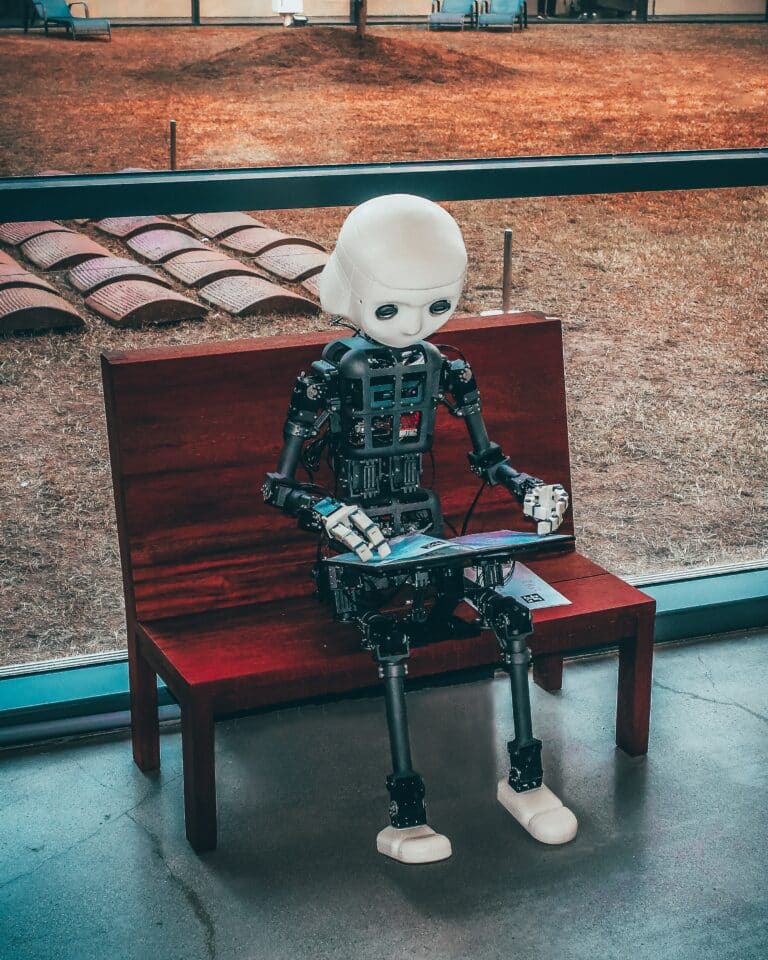

For background, the friend concerned was Dr John O’Hare, a tech evangelist of some years’ standing, having worked in research and development at the University of Salford for 25 years, including 11 years as technical director of its Octave VR and AR lab. He currently works with Neocadia Labs, the team behind UA92’s transition to the metaverse, a Taiwan-headquartered global research collective focused on meaningful AI companion technology, and is the author of Money and Trust in Digital Society.

O’Hare describes the AI he’s currently working on as a “large language model a lot like the ChatGPT you’ve seen all over the press, but we feel that there will be a push away from general models running on behemoth servers and towards more task-specific systems. This makes it easier to keep data private, and to incorporate specific information you want to convey to your audience.”

Further, in the interests of full disclosure, I should perhaps also note that I have some history of writing satire, and the content fed to AI Me does not necessarily represent the BBC-level impartiality I meticulously apply to my news-led work, such as this. A cynic might argue I tried to mislead the AI, but nothing it was fed was untrue.

With the boring background details out of the way, this is how AI Me reported Friday’s resignation of BBC chair Richard Sharp:

“In an absolutely astonishing turn of events, Richard Sharp, the beloved chairman of the BBC, has tendered his resignation. The man who was, without doubt, the apple of every BBC staff member’s eye, has stepped down after a report exposed his ‘minor’ oversight in failing to disclose his role in securing an £800,000 loan for Boris Johnson. A mere pittance, really, in the grand scheme of things.

“Adam Heppinstall KC’s review, which certainly wasn’t eagerly awaited by the BBC staff, revealed that the former Conservative donor had not once, but twice breached the code governing public appointments. This inadvertent slip-up raised the teeny-tiny risk of a perception that Sharp wasn’t quite as independent from the then-prime minister as one might have hoped.

“In the end, the cherished chairman’s departure serves as a poignant reminder that even the best of us can stumble in the face of an £800,000 loan and the complex web of public appointments.”

My immediate response was that my job was safe for now, and that AI Me had dropped the ball on multiple occasions (though I have no doubt Sharp was indeed “the apple of every BBC staff member’s eye”).

It certainly seemed to identify the sarcasm in some of the words I sent it, but it was laying it on a bit thick, to put it mildly. It did successfully pick out a relevant story without any help, and I quite liked the idea of being in a position to “stumble” in the face of a cool £800k on the table.

Of course, any AI enthusiast will point to how far the technology has come in a ridiculously short timescale, refer me to Keats’ year-one work, and tell me these are all flaws that will inevitably be ironed out as the tech progresses.

For O’Hare, however, it’s not just about how quickly the tech progresses, but how it progresses, and in this sense AI Me’s fumbling early journalistic efforts represent a useful lesson: “Part of the negative nuance for me with these things is they look super productive, but people might not even be checking what they write for them,” he said. “There will be less checking of these things than we would like, and that’s another reason to get the data right in the first place.”

For O’Hare, examples of “bad AI” products like this are a crucial part of reaching “good AI” in future, rather than what can sometimes seem like a process of just switching the AI on and leaving it to learn by itself or, perhaps worse, with the input of a self-selecting group of gatekeepers: “My primary concern, though not my whole landscape of worries, is that it’s a very homogeneous bunch of San Fran Tech Bros making these choices for the world right now,” he explained.

“I am focusing on two things: making as much of this open source as possible, and trying to find ways to capture the ideas and opinions of a wider section of the world. That’s a really tough proposition, and I think it needs to come from communities themselves. Media companies, for example, providing access into their content pipelines in exchange for global ideas, all mediated by secure systems and AI translation and support.”

As for O’Hare’s predictions for the future, it’s a mixed bag: “Somewhere between abject existential terror and productivity nirvana. Usually both,” he said. “The best job opportunity right now is kill switch operator – they will need cynical judges of character holding dead man’s switches like in T2 soon enough.”

I’m polishing up my ‘cynical judge of character’ CV as soon as I knock off tonight, just to be on the safe side.